Project Information

- Category: Web App Development & Deep Learning

- Personal Project

- Technology Used: Reinforcement Learning (Q-Learning), Deep Neural Networks, CUDA.jit, Python, Flask, PyTorch, Amazon Web Services (AWS)

- Date: September 2021 - March 2025

- Github (AlphaFour): github/Alpha_Four

Project Overview

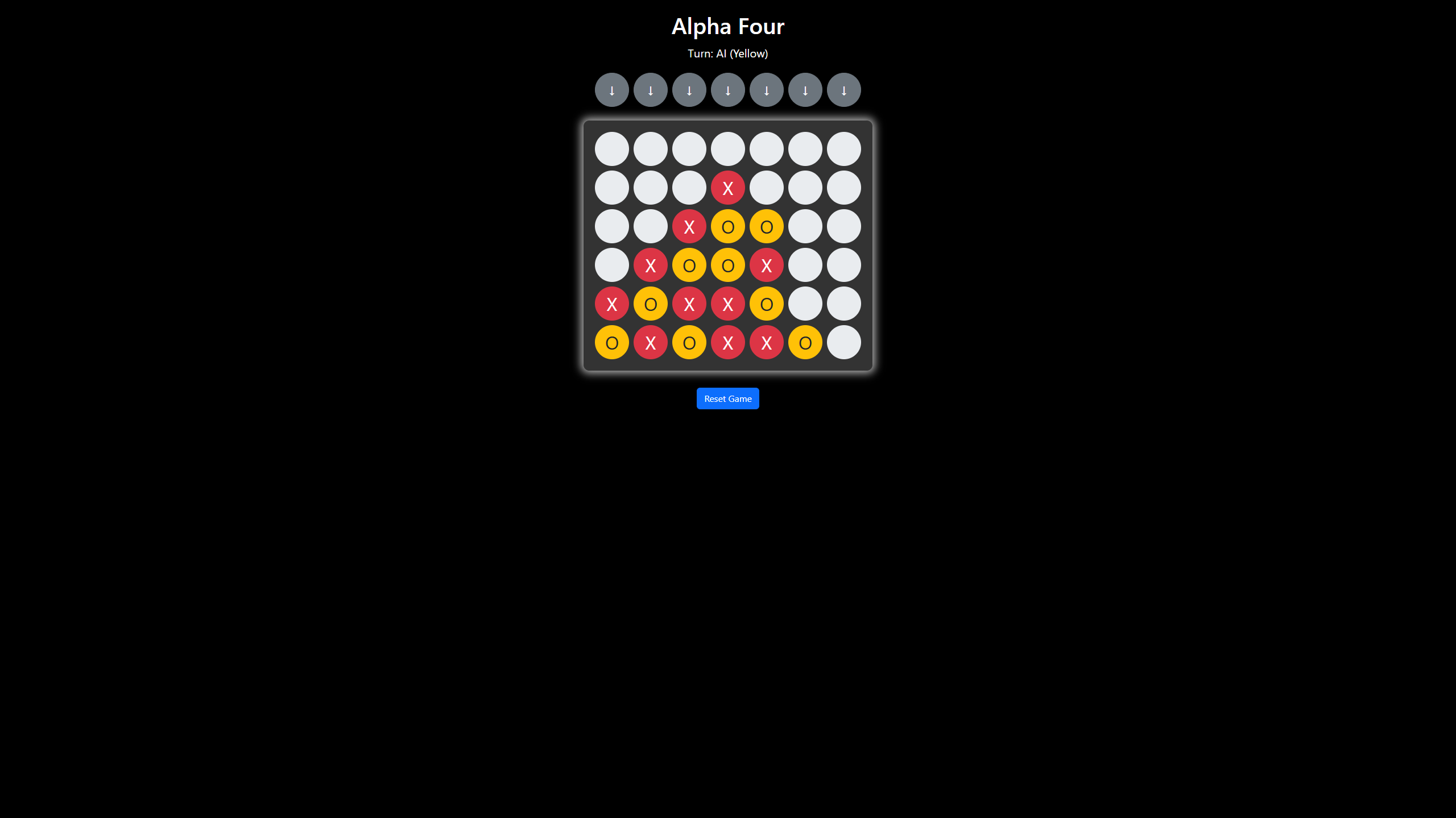

This project centers on developing a Connect Four game featuring an intelligent, adaptive opponent. The standout innovation is a hybrid reinforcement learning system that fuses Deep Q-Networks (DQN) with an optimized, dynamic Monte Carlo Tree Search (MCTS), combining fast state evaluation with deeper, more robust decision-making.
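One way to picture the fusion at training time is a target that mixes the DQN's one-step TD estimate with a value returned by MCTS rollouts. The snippet below is a minimal sketch under stated assumptions, not the project's actual code: the function name `blended_target`, the mixing weight `mix`, and the default `gamma` are all hypothetical, and the next-state Q-values are assumed to be expressed from the learning agent's perspective.

```python
from typing import Sequence


def blended_target(
    reward: float,
    next_q_values: Sequence[float],
    mcts_value: float,
    done: bool,
    gamma: float = 0.99,  # assumed discount factor
    mix: float = 0.5,     # assumed weight on the MCTS estimate
) -> float:
    """Mix a one-step TD target with an MCTS rollout estimate.

    mix = 0.0 gives a plain DQN target, mix = 1.0 trusts MCTS alone.
    """
    if not 0.0 <= mix <= 1.0:
        raise ValueError("mix must lie in [0, 1]")

    # No bootstrapping from a terminal position or an empty action set.
    bootstrap = 0.0 if done or not next_q_values else max(next_q_values)
    td_target = reward + gamma * bootstrap

    return (1.0 - mix) * td_target + mix * mcts_value
```

With `mix` close to 0 the update behaves like ordinary Q-learning; raising it leans more heavily on search results, which tend to be less biased early in training but cost more to compute.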

Highlights include:

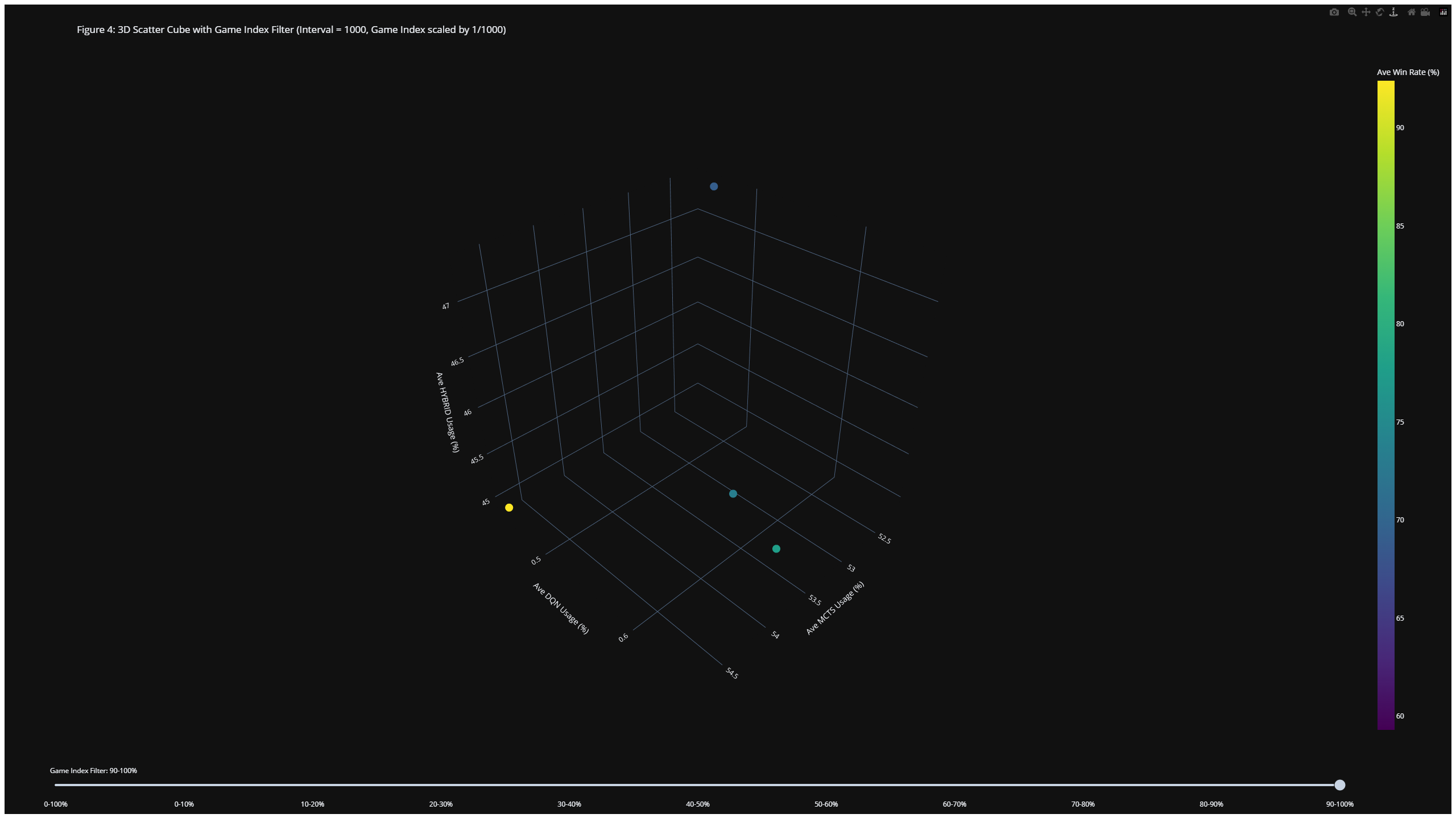

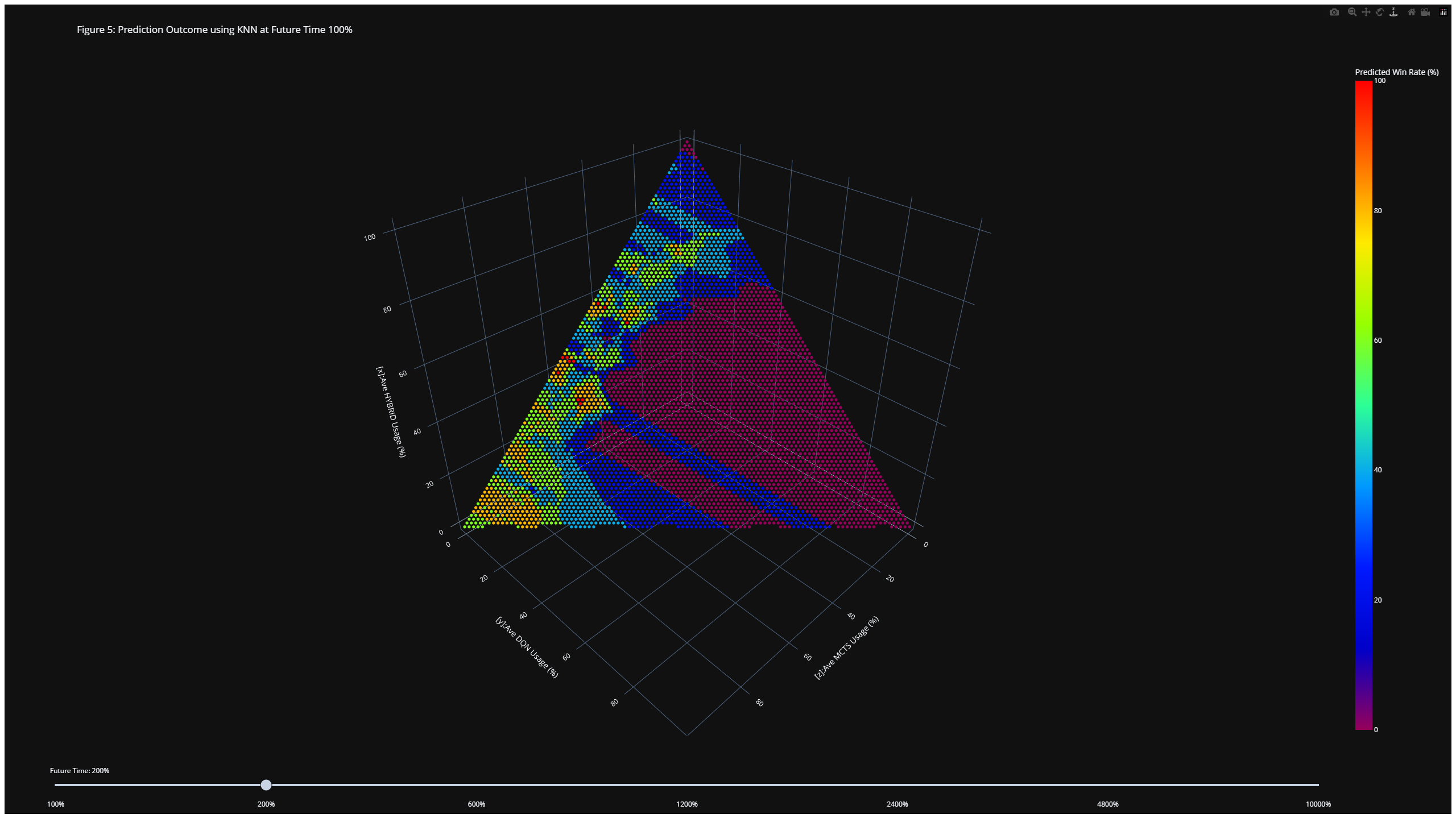

- Hybrid Architecture: Uses the DQN for fast state evaluation and MCTS for robust action selection, balancing speed against strategic depth.

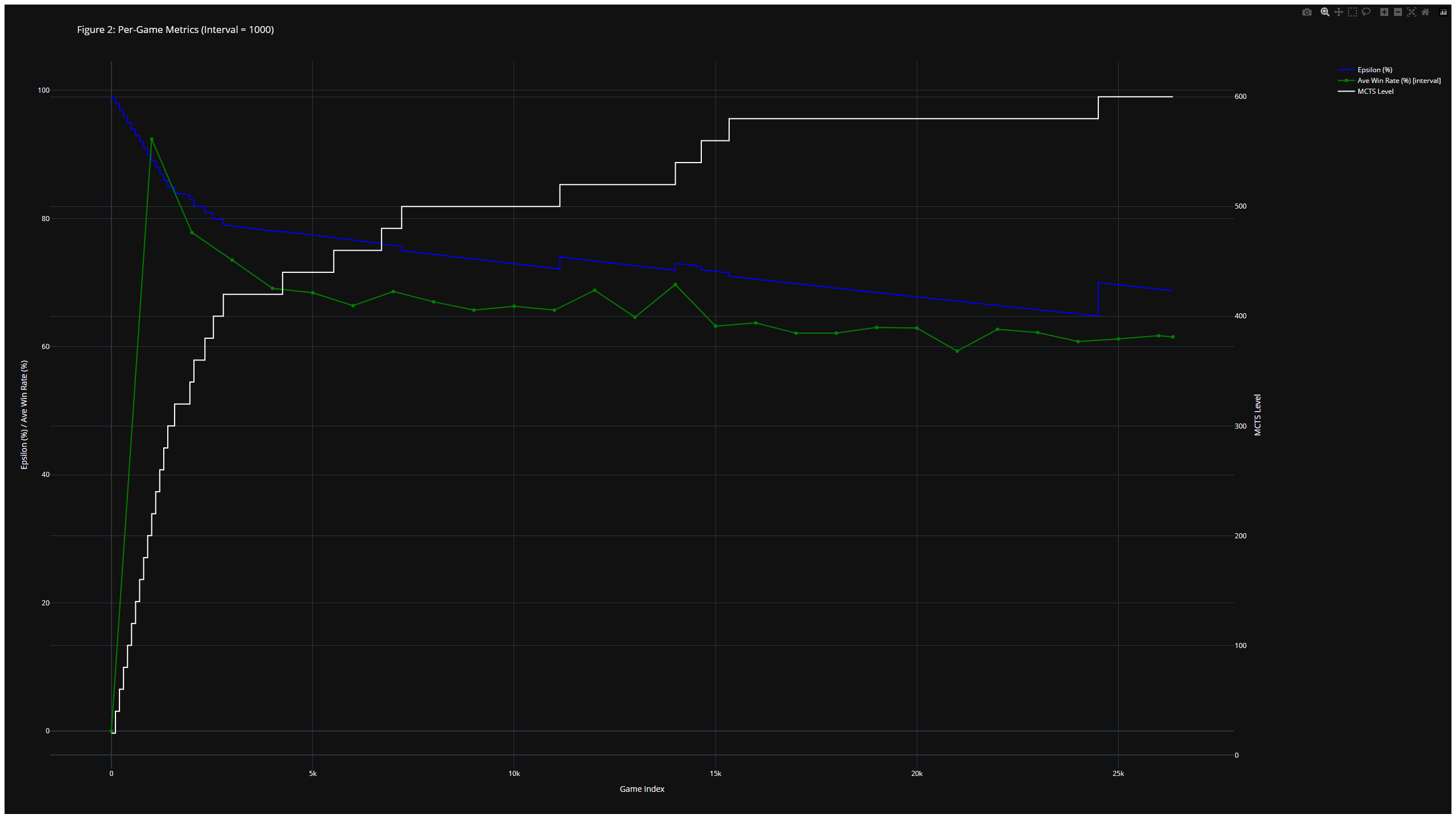

- Dynamic Leveling: Increases the number of MCTS simulations only once the agent's win rate reaches a fixed 90% threshold, so additional compute is spent only when the agent is consistently performing at a high level.

- Optimized Training: Boosts training efficiency by blending TD targets from DQN with MCTS rollouts, and accelerates MCTS simulations using CUDA.jit, yielding up to a 1000% speedup over CPU implementations.

- State Management & Checkpointing: Keeps the dynamic training state in memory (simulation level, exploration rate, and episodes per level) and checkpoints it to disk every 100 episodes so training can resume reliably.
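The dynamic leveling and checkpointing highlights can be sketched together as a small training-state object. This is an illustrative sketch only: the 90% threshold and the 100-episode checkpoint interval come from the description above, while `TrainingState`, `EVAL_WINDOW`, `SIMS_STEP`, the starting simulation count, and the JSON file format are assumptions made for the example.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass

WIN_RATE_THRESHOLD = 0.90  # fixed threshold from the description
CHECKPOINT_EVERY = 100     # episodes between checkpoints, from the description
EVAL_WINDOW = 100          # assumption: episodes per win-rate evaluation
SIMS_STEP = 50             # assumption: simulations added per level


@dataclass
class TrainingState:
    episode: int = 0
    mcts_simulations: int = 50  # assumed starting level
    epsilon: float = 1.0        # exploration rate
    episodes_at_level: int = 0
    window_episodes: int = 0
    window_wins: int = 0

    def record_episode(self, won: bool) -> bool:
        """Update counters; return True if the MCTS level was raised."""
        self.episode += 1
        self.episodes_at_level += 1
        self.window_episodes += 1
        self.window_wins += int(won)
        if self.window_episodes < EVAL_WINDOW:
            return False

        leveled_up = self.window_wins / self.window_episodes >= WIN_RATE_THRESHOLD
        if leveled_up:
            self.mcts_simulations += SIMS_STEP
            self.episodes_at_level = 0
        # Start a fresh evaluation window either way.
        self.window_episodes = 0
        self.window_wins = 0
        return leveled_up


def save_checkpoint(state: TrainingState, path: str) -> None:
    """Write the state atomically so a crash never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(asdict(state), handle)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> TrainingState:
    """Resume from disk, or start fresh if no checkpoint exists."""
    if not os.path.exists(path):
        return TrainingState()
    with open(path) as handle:
        return TrainingState(**json.load(handle))
```

In a training loop, a call such as `if state.episode % CHECKPOINT_EVERY == 0: save_checkpoint(state, "state.json")` after each `record_episode` would give the 100-episode cadence; the file name here is a placeholder, and network weights would need to be saved alongside it by whatever mechanism the training code uses.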